ServeLLM

ServeLLM is an advanced, self-hosted LLM platform that enables businesses to run, manage, and scale their own AI models securely. It is built for organizations that want complete control over their AI infrastructure.

Project Summary

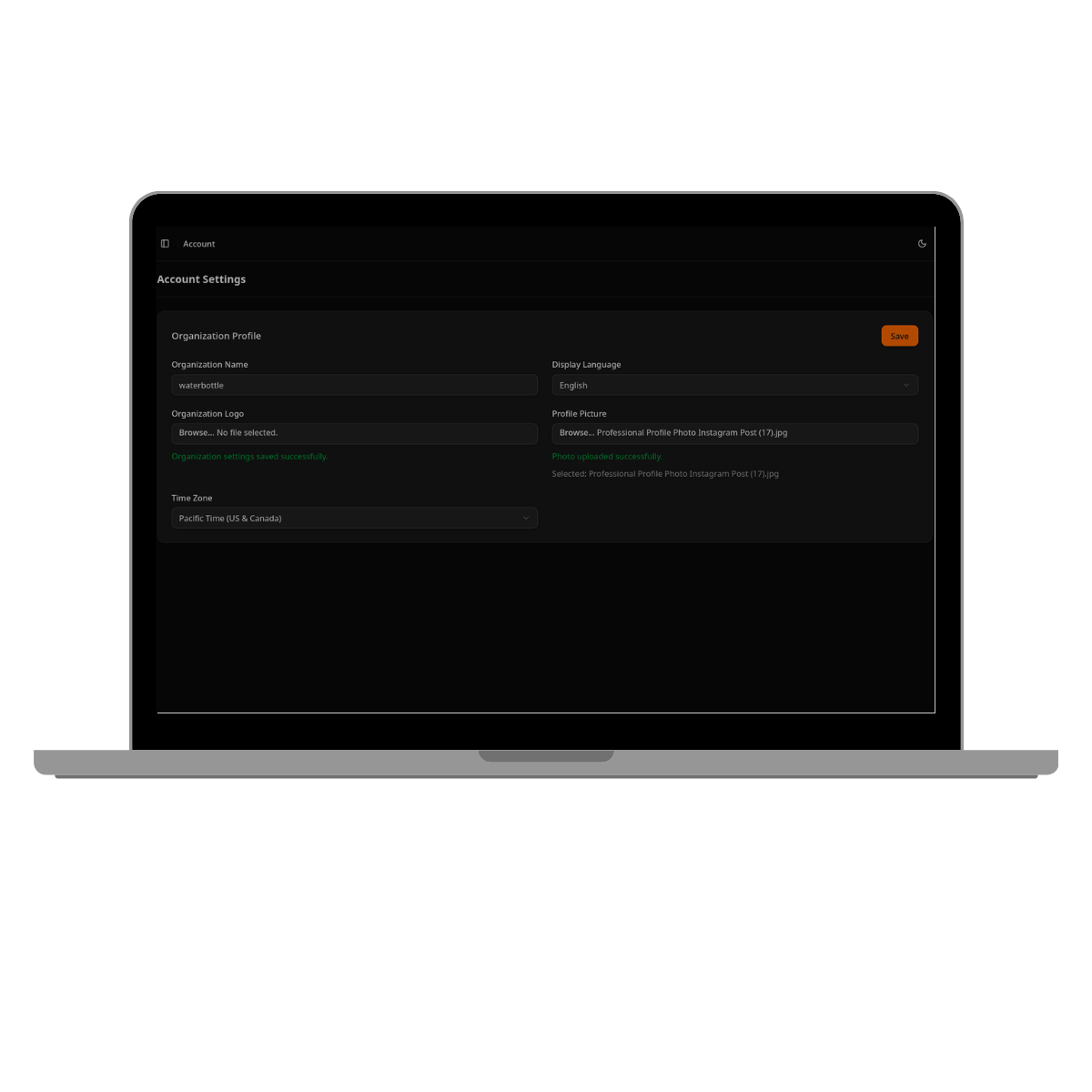

ServeLLM marks a significant step forward in AI infrastructure. Designed with flexibility, security, and usability in mind, it pairs a seamless interface with a robust backend, giving organizations full control over how their language models are deployed and monetized.

Techanzy Limited brings a rare mix of deep technical expertise and practical thinking. Their work resulted in a functional platform that met the client's core requirements. The team was responsive, easy to work with, and aligned with the client's evolving needs.

Client Review

ServeLLM collaborated with Techanzy to design and develop a full-fledged AI platform that lets organizations deploy large language models on their own infrastructure. Through continuous feedback loops and agile development practices, our team built a scalable backend and an intuitive frontend that deliver an OpenAI-like experience for both end users and admins.

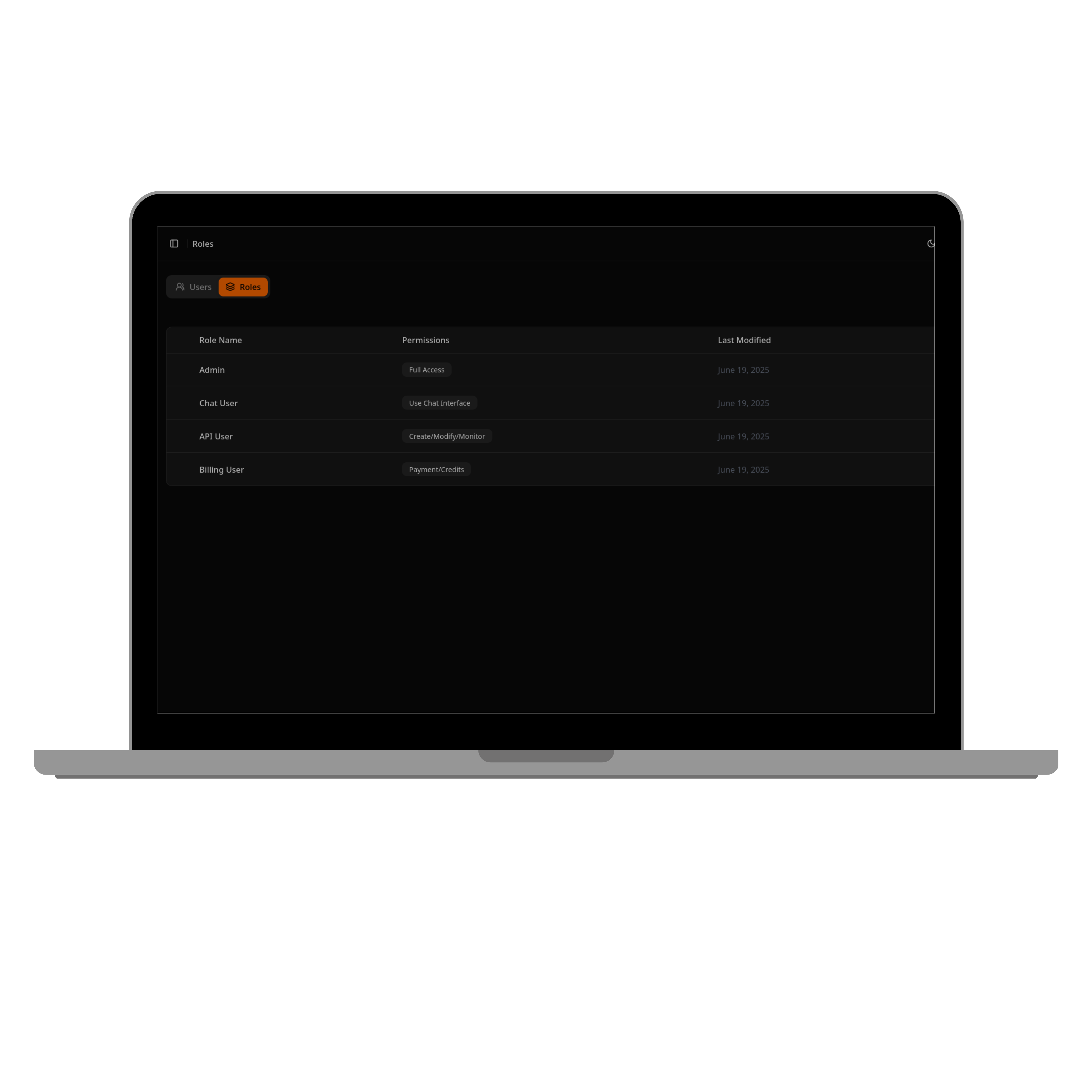

Techanzy developed ServeLLM for data-sensitive organizations and AI developers, with a comprehensive suite of tools for deploying and managing LLMs:

- A self-hosted LLM environment supporting Ollama and vLLM with flexible deployment options.

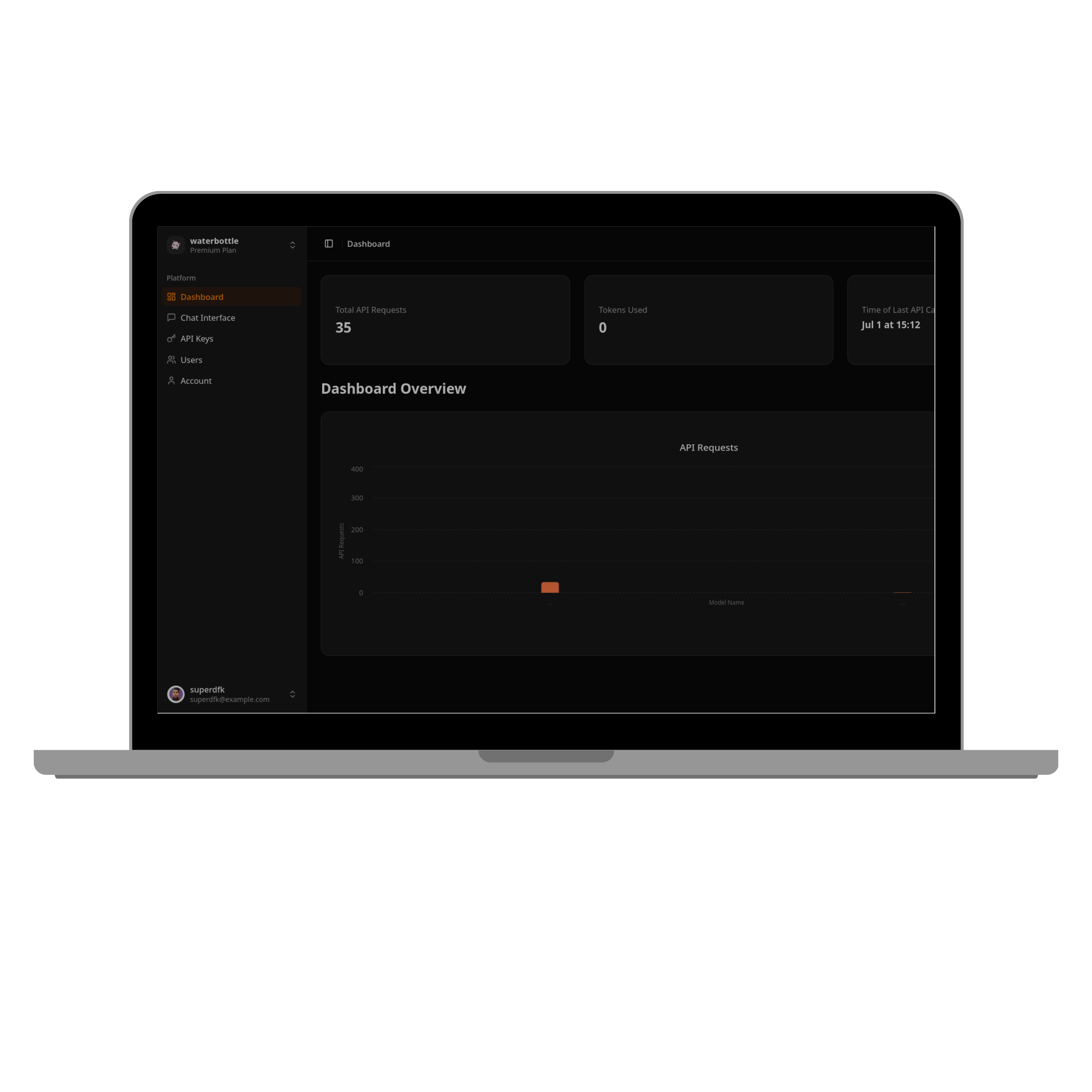

- Centralized dashboard for managing APIs, credits, billing, and user access control.

- A Stripe-powered monetization system for API services, with token-based usage tracking.

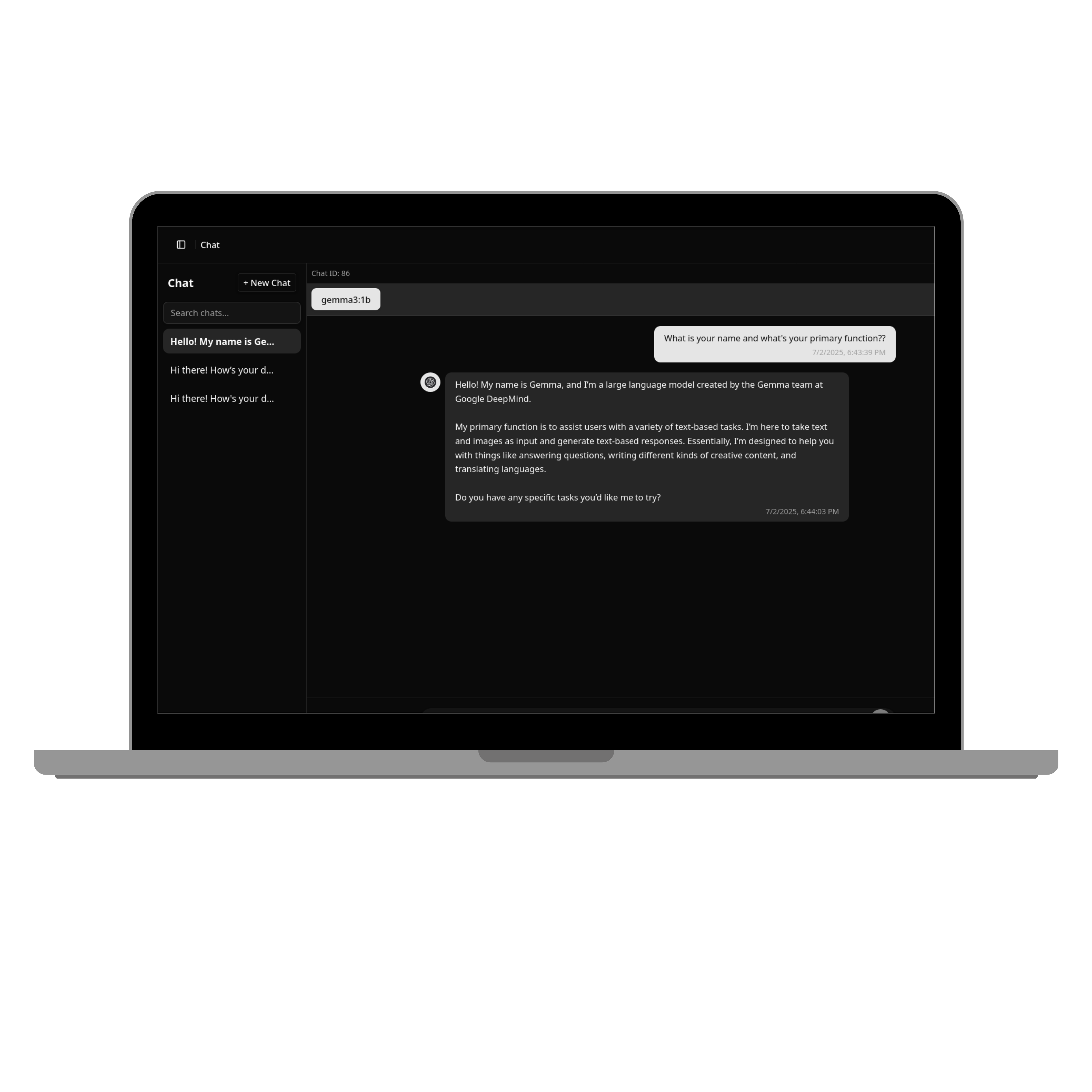

- A modern chat interface and API explorer that provide an OpenAI-like experience.

- Admin-exclusive debugging through OpenWebUI for real-time model testing and optimization.

- Docker and Kubernetes support for both single-machine and multi-node deployments.
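The OpenAI-like experience described above usually means clients speak the OpenAI chat completions wire format. Both vLLM (whose OpenAI-compatible server defaults to `http://localhost:8000/v1`) and Ollama (which exposes an OpenAI-compatible API at `http://localhost:11434/v1`) accept this format, so a client could look roughly like the minimal sketch below. This case study does not document ServeLLM's own API: the base URL, model name, and bearer-token scheme are assumptions for illustration, and `build_chat_request` and `send` are hypothetical helpers built only on the Python standard library.

```python
import json
import urllib.request

# Assumed default: a local vLLM OpenAI-compatible server. For Ollama, use
# http://localhost:11434/v1 instead. ServeLLM's real gateway URL is not documented here.
DEFAULT_BASE_URL = "http://localhost:8000/v1"


def build_chat_request(messages, model, api_key, base_url=DEFAULT_BASE_URL):
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            # Hypothetical auth scheme: a per-user API key issued by the platform.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Separating request construction from sending keeps the payload easy to inspect or log, for example in an API explorer, before any network call is made. Calling `send(build_chat_request([{"role": "user", "content": "Hello"}], model="YOUR_MODEL", api_key="YOUR_API_KEY"))` against a running server would return the standard response object with `choices` and `usage` fields.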

ServeLLM changed how teams interact with and manage their AI workloads, giving them direct control over where models run, who can access them, and how usage is billed.

- Empowered organizations to fully own their LLM deployments, improving compliance and privacy.

- Offered scalability on demand, from simple single-server use cases to enterprise-grade clusters.

- Enabled token-based billing models, allowing monetization and budget control within teams.

- Delivered a clean, user-friendly interface modeled on industry leaders like OpenAI, but running on private infrastructure.

- Allowed admins to test and monitor models in real time through OpenWebUI, speeding up development.
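Token-based billing, as described above, can be modeled as deducting credits from an account after each request, using the `usage` counts (`prompt_tokens`, `completion_tokens`) that OpenAI-style APIs return. The sketch below is hypothetical: the per-1,000-token rates, the `Account` and `Pricing` types, and the rule of rejecting a charge when credits run out are illustrative assumptions, not ServeLLM's actual billing logic, and the Stripe side (topping up credits) is left out entirely.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Pricing:
    # Hypothetical rates, in credits per 1,000 tokens.
    prompt_per_1k: Decimal
    completion_per_1k: Decimal


@dataclass
class Account:
    # Hypothetical prepaid credit balance (topped up elsewhere, e.g. via Stripe).
    credits: Decimal


class InsufficientCredits(Exception):
    """Raised when a charge would take the balance below zero."""


def cost_of(usage: dict, pricing: Pricing) -> Decimal:
    """Price one request from its OpenAI-style usage counts."""
    prompt = Decimal(usage["prompt_tokens"]) * pricing.prompt_per_1k
    completion = Decimal(usage["completion_tokens"]) * pricing.completion_per_1k
    return (prompt + completion) / Decimal(1000)


def charge(account: Account, usage: dict, pricing: Pricing) -> Decimal:
    """Deduct the request's cost from the account, or refuse if credits run out."""
    cost = cost_of(usage, pricing)
    if cost > account.credits:
        raise InsufficientCredits(f"need {cost}, have {account.credits}")
    account.credits -= cost
    return cost
```

Using `Decimal` rather than `float` avoids binary rounding error when many small charges accumulate, and pricing prompt and completion tokens separately lets a team budget reflect that generated tokens often cost more to serve.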

Not sure which service to choose? Tell us about your infrastructure goals, and we will tailor a solution like ServeLLM that meets your performance, privacy, and deployment needs, with no compromises.